Ever heard of the AI black box problem?

01/01/2021

The promise of AI does not always live up to the fairy tale. Some of the rules an AI model learns are hard to explain, which can lead to sub-optimal results. This difficulty in explaining a model's rules, known as the black box problem, is becoming the next big challenge in the AI world. To tackle it, data scientists must explain a model’s predictions in a way that a non-technical audience can understand. Read on to learn more.

Contributors: Jose Maria Lopez, Head of Bus. Dev. Mobile Competence Centre & Innovation, and Minh Le, Head of Connected Vehicle & Emerging IoT Offerings

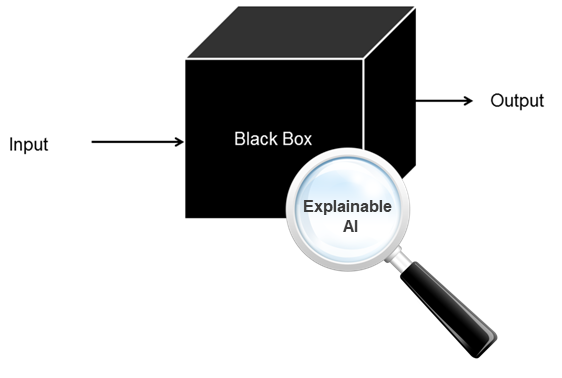

Black Box AI problem

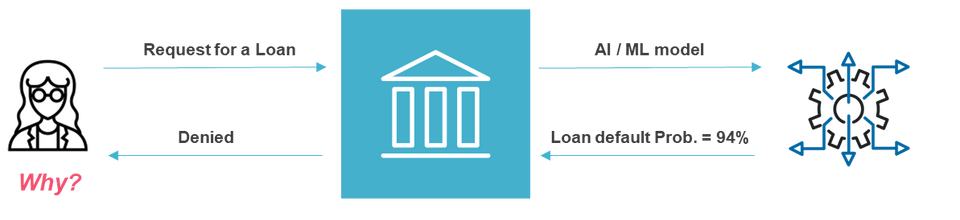

Imagine that you go to your usual bank to request a loan for some home improvements. After a quick check on their computer, the bank manager tells you that the loan cannot be approved. You have been a good customer of the bank for fifteen years, so you don’t understand the decision and ask for an explanation. The manager replies that, unfortunately, the decision comes from the bank’s new Artificial Intelligence algorithm and that they cannot tell you the reason for the rejection. This scenario is, unfortunately, already playing out in real life, and several high-profile cases have occurred in the industry recently. For example, in 2019 Apple co-founder Steve Wozniak accused the Apple Card’s algorithm of gender discrimination, pointing out that the card gave him a credit limit ten times higher than his wife's, even though the couple shared all their assets.

Artificial Intelligence provides great benefits for organisations, boosting their efficiency and enabling new ways of working. But explainability is crucial in systems that are responsible for decisions and automated actions.

Powerful AI/ML (Machine Learning) models, in particular Deep Neural Networks, tend to be very hard to explain (the “Black Box problem”). This sometimes creates a dilemma: one model (an LSTM neural network, for example) may perform much better than a simpler one (such as a logistic regression), yet be much harder to understand and explain.
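To see why a logistic regression counts as the easy-to-explain option, note that it predicts the log-odds of default as an intercept plus a weighted sum of the features, so each feature's contribution can be read off directly. The following is a minimal Python sketch. The coefficients are invented for illustration, and the feature names are borrowed from the loan dataset discussed later in this article.

```python
import math

# Hypothetical logistic regression for loan default; coefficients are invented.
INTERCEPT = -2.0
COEFFICIENTS = {"DELINQ": 0.8, "DEROG": 0.6, "NINQ": 0.3, "LOAN": -0.00001}


def explain_logistic(applicant: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return P(default) and each feature's additive contribution to the log-odds."""
    # In a logistic regression, log-odds = intercept + sum(coefficient * feature value),
    # so every term of the sum is a directly readable contribution.
    contributions = {name: coef * applicant[name] for name, coef in COEFFICIENTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))  # sigmoid
    return probability, contributions


if __name__ == "__main__":
    prob, contrib = explain_logistic({"DELINQ": 2, "DEROG": 1, "NINQ": 3, "LOAN": 20000})
    print(f"P(default) = {prob:.2f}")
    # List features from largest to smallest absolute effect.
    for name, value in sorted(contrib.items(), key=lambda kv: -abs(kv[1])):
        print(f"{name:>7}: {value:+.2f} log-odds")
```

A deep network such as an LSTM has no comparably direct decomposition of its output. This is why post-hoc explainability techniques like the ones described below are needed.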

Another major problem is that if there is a bias in the data used to train AI/ML algorithms, then that bias will be present in the decisions that the algorithms make, and this is clearly unacceptable.

The importance of explainable AI

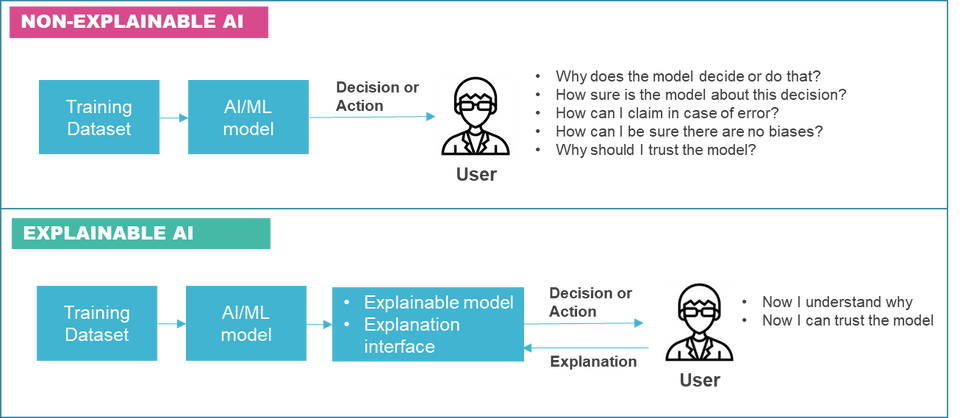

Explainable AI refers generally to methods and techniques that enable an understanding of why an AI/ML model is giving specific results. This understanding of a model’s behaviour is very important for the following main reasons:

- Presenting the model to a non-technical audience with a stake in the use case.

- Explaining why an individual entry receives a specific prediction or classification, making the model’s output easier for people to understand.

- Debugging odd behaviours in the model.

- Controlling the model’s behaviour to avoid discrimination and reduce societal bias.
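As an illustration of the last point, one simple check is to compare approval rates across groups of applicants. The sketch below computes each group's approval rate and the ratio of the lowest rate to the highest. The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of approved applications per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, is_approved in decisions:
        totals[group] += 1
        approved[group] += int(is_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions: list[tuple[str, bool]]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means equal rates)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical decisions: group A has 8 of 10 approved, group B has 5 of 10.
    sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
    print(approval_rates(sample), disparate_impact_ratio(sample))
```

Here the ratio is 0.5 / 0.8 = 0.625. A commonly quoted rule of thumb, the “four-fifths rule” from US employment-selection guidelines, treats ratios below 0.8 as a signal to investigate the model and its training data more closely.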

The inclusion of explainable AI techniques by design in the AI/ML models offers significant benefits to those involved:

| Company / Organisation | End Users / Consumers | Regulators |
|---|---|---|
| Improve understanding | Increase trust | Compliance and regulations |
| Improve performance | Increase transparency | Provide trust and transparency |
| Evaluate the quality of the data used | Understand the importance of their actions | Ensure the proper use of sensitive data |
| Avoid bias | Be aware of the data they share | Avoid bias and discrimination |

To gain the benefits of explainability, data scientists must design accurate AI/ML models and also take on the challenge of explaining those models’ predictions in a way that a non-technical audience can understand.

Explainable AI is increasingly becoming a “must” in the design of AI/ML-powered systems and solutions (see the graph below).

Explainability methods in loan default prediction

Returning to the use case described at the beginning of this article, let’s imagine that we are a bank receiving loan requests from clients, some of whom may offer one of their properties as collateral for the loan.

The bank should have in place the necessary mechanisms to:

1) Calculate the risk associated with the loan (AI/ML model) in order to decide judiciously whether to grant or deny it.

2) Apply explainability methods (explainable model) and provide the relevant tool (explanation interface) so that the bank’s clerk can explain the decision (especially in the case of a rejection) to the client in an understandable way.
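An explanation interface can be as simple as turning the per-feature contributions produced by an explainability method into a few sentences that the clerk can read out. The sketch below assumes a dictionary of contributions in which a positive value means the feature pushed the prediction towards default. The helper name `top_reasons` and the sentence template are hypothetical. The feature descriptions are the ones given for the loan dataset later in this article.

```python
# Plain-language descriptions of the loan dataset features used in this article.
FEATURE_DESCRIPTIONS = {
    "DELINQ": "number of delinquent credit lines",
    "DEROG": "number of major derogatory reports",
    "NINQ": "number of recent credit inquiries",
    "LOAN": "amount of the loan request",
    "MORTDUE": "amount due on existing mortgage",
    "VALUE": "value of current property",
}


def top_reasons(contributions: dict[str, float], k: int = 2) -> list[str]:
    """Turn per-feature contributions (positive = towards default) into short reasons."""
    # Keep only features that pushed the prediction towards default, strongest first.
    risky = sorted(
        ((name, weight) for name, weight in contributions.items() if weight > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    return [
        f"The applicant's {FEATURE_DESCRIPTIONS.get(name, name)} raised the estimated default risk."
        for name, _ in risky[:k]
    ]


if __name__ == "__main__":
    print(top_reasons({"DELINQ": 0.5, "NINQ": 0.3, "LOAN": -0.1, "DEROG": 0.1}))
```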

From a technical point of view, there are several Python libraries that implement explainability techniques for Machine Learning. Some of the most popular techniques they implement are PDP, LIME, SHAP and ANCHOR. The typical approach starts from a dataset with several features and a target label (1 = applicant defaulted on the loan; 0 = applicant repaid the loan). As a first step, the data scientist tries to find the best model in terms of performance metrics such as precision, recall and AUC score. Once a good model has been found, the data scientist can apply the different explainability techniques to it. Two of these, PDP and LIME, are explained below.
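For the first step, comparing candidate models, the metrics named above can be computed from a validation set’s true labels and the model’s outputs. Below is a dependency-free sketch (in practice a library such as scikit-learn provides these metrics). It computes precision and recall for the default class (1), and computes AUC as the probability that a randomly chosen defaulter is scored higher than a randomly chosen non-defaulter. The function names are illustrative.

```python
def precision_recall(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Precision and recall for the positive class (1 = applicant defaulted)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def auc_score(y_true: list[int], scores: list[float]) -> float:
    """ROC AUC: chance a random defaulter outscores a random non-defaulter (ties count half)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    labels = [1, 0, 1, 0, 1, 0]
    model_scores = [0.9, 0.2, 0.6, 0.7, 0.4, 0.1]  # hypothetical predicted default probabilities
    predictions = [1 if s >= 0.5 else 0 for s in model_scores]
    print(precision_recall(labels, predictions), auc_score(labels, model_scores))
```

The pairwise AUC loop is quadratic in the number of examples. That is fine for illustration but too slow for large validation sets, where a rank-based computation is preferable.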

PDP

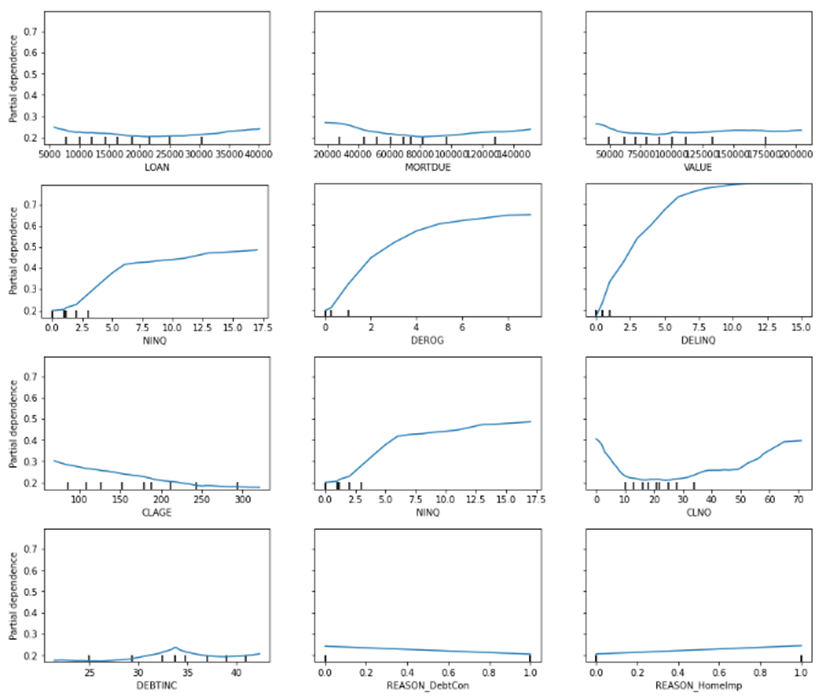

Partial dependence plots (PDP) show how the model’s prediction depends on one feature (or a pair of features), on average across the dataset. Intuitively, they reveal which features the model relies on most for its predictions in general: the more a feature’s curve rises or falls, the more that feature matters to the model.

The next figure shows that DELINQ (number of delinquent credit lines), DEROG (number of major derogatory reports) and NINQ (number of recent credit inquiries) features are very relevant in the default prediction in this particular model, while other features such as LOAN (amount of the loan request), MORTDUE (amount due on existing mortgage) and VALUE (value of current property) have little relevance.
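Computing partial dependence is straightforward: for every row in the data, force the feature of interest to each value on a grid, and average the model’s predictions. The sketch below does exactly that. `toy_default_model` is a hypothetical stand-in for a trained model, and its coefficients are invented.

```python
from statistics import mean
from typing import Callable

Row = dict[str, float]


def partial_dependence(
    predict: Callable[[Row], float],
    rows: list[Row],
    feature: str,
    grid: list[float],
) -> list[float]:
    """Average prediction over all rows with `feature` forced to each grid value."""
    curve = []
    for value in grid:
        # Override only the chosen feature; every other feature keeps its observed value.
        predictions = [predict({**row, feature: value}) for row in rows]
        curve.append(mean(predictions))
    return curve


def toy_default_model(row: Row) -> float:
    """Hypothetical stand-in for a trained model: a default probability capped at 1."""
    return min(0.1 + 0.15 * row["DELINQ"] + 0.05 * row["NINQ"], 1.0)


if __name__ == "__main__":
    data = [
        {"DELINQ": 0.0, "NINQ": 1.0, "LOAN": 10000.0},
        {"DELINQ": 1.0, "NINQ": 2.0, "LOAN": 25000.0},
    ]
    print(partial_dependence(toy_default_model, data, "DELINQ", [0.0, 1.0, 2.0, 3.0]))
    print(partial_dependence(toy_default_model, data, "LOAN", [5000.0, 50000.0]))
```

The toy model ignores LOAN, so its partial dependence curve for LOAN is perfectly flat. This is the pattern behind a feature having “little relevance” in a PDP.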

LIME

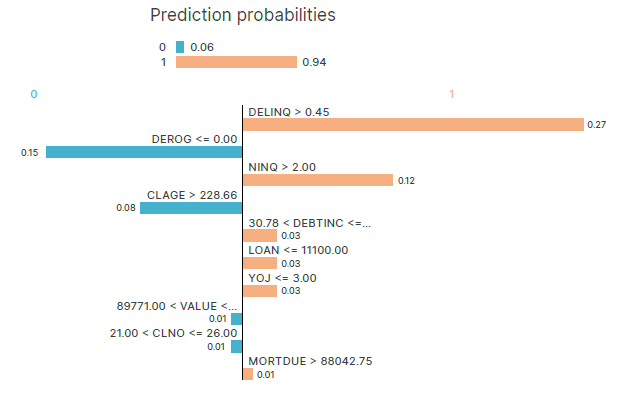

LIME (Local Interpretable Model-agnostic Explanations) shows the weight of each feature in an individual prediction. This makes it very useful for giving an interpretable reason for a specific loan rejection.

The next figure shows an example where the main factors predicting a 94% chance of a loan default are the DELINQ (number of delinquent credit lines) and NINQ (number of recent credit inquiries) of this sample entry.
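The core idea of LIME can be sketched in a few lines: sample points around the applicant, weight them by how close they are, and fit a weighted linear model whose coefficients serve as the local explanation. The sketch below is a simplified, dependency-free variant that uses Gaussian perturbations and an exponential proximity kernel. The function names, the per-feature `scales` argument and the defaults are assumptions. The `lime` package itself is more elaborate; for tabular data it can discretise features and, by default, fits a ridge regression.

```python
import math
import random
from typing import Callable, Optional


def solve_linear_system(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_linear_weights(
    predict: Callable[[list[float]], float],
    instance: list[float],
    scales: list[float],
    num_samples: int = 500,
    kernel_width: Optional[float] = None,
    seed: int = 0,
) -> list[float]:
    """Simplified LIME: per-feature weights of a weighted linear fit around `instance`."""
    rng = random.Random(seed)
    d = len(instance)
    # Default width similar to the one used by the lime package: 0.75 * sqrt(#features).
    width = kernel_width if kernel_width is not None else 0.75 * math.sqrt(d)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)  # accumulates X^T W y
    for _ in range(num_samples):
        # Perturb every feature with Gaussian noise measured in units of its scale.
        noise = [rng.gauss(0.0, 1.0) for _ in range(d)]
        z = [x + e * s for x, e, s in zip(instance, noise, scales)]
        # Samples closer to the instance (in scaled units) get larger weights.
        weight = math.exp(-sum(e * e for e in noise) / width ** 2)
        row = [1.0] + z  # leading 1.0 is the intercept column
        y = predict(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coefficients = solve_linear_system(xtwx, xtwy)
    return coefficients[1:]  # drop the intercept, one weight per feature
```

In the loan use case, `predict` would wrap the trained model’s default probability, and `scales` would typically be the features’ standard deviations in the training data. A large positive weight on DELINQ or NINQ would then correspond to the kind of explanation shown in the figure.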

Beyond the Python libraries mentioned above and others, some of the big cloud computing providers that offer AI/ML products are starting to integrate explainable AI tools into their frameworks. For example, Microsoft Azure Machine Learning and Google Cloud AI Platform already provide several interpretation and explanation tools for preparing and evaluating models.

Explainable AI in finance and the payments industry

Financial institutions have pioneered the adoption of AI/ML algorithms for multiple use cases:

- Fraud detection

- Time series forecasting

- Loan approval

- Credit scoring

- Claims management

- Sentiment analysis-based trading signals

- Asset management

The high availability of data and increasing computing capacity at reasonable costs allow companies in the financial and payments industry to apply Artificial Intelligence at scale and to automate tasks and decisions. Organisations which implement AI/ML in their processes will have a crucial competitive advantage over those who do not.

Ingestion and analysis of large amounts of data, including historical customer data, technical and economic factors, textual information and reports, can provide valuable insights. However, hidden trends within big data are frequently too complicated for human agents to understand, particularly when the dimensionality of the data increases.

Most state-of-the-art AI/ML models are based on Deep Learning and Deep Reinforcement Learning, using increasingly complex deep neural networks for time-series analysis, Natural Language Processing and more. In asset management, for example, AI has completely changed the dynamics of investment strategy through AI-powered quantitative models.

Because these activities are so relevant and have such impact, the models behind them must be not only highly accurate but also explainable in the decisions and actions they take. This benefits customers and satisfies regulators, and it also makes the operations of financial and payment institutions more secure.

Download the whitepaper “Harnessing AI to Achieve Hyperautomation in Payments” and learn more about how you can benefit from AI-based services to transform your business today.