Strategizing Large Language Model integration in the payment ecosystem.

03 / 05 / 2024

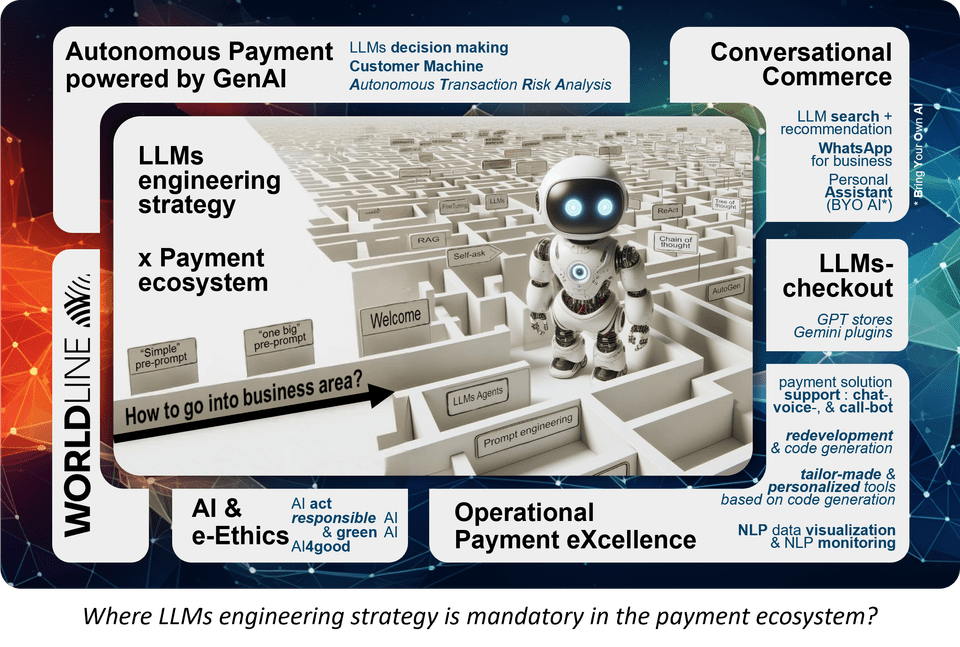

After more than 16 months of extraordinary buzz around ChatGPT, GenAI and Large Language Models (LLMs), it seems clear that while LLMs may not reshape all core payment solutions, they are undoubtedly revolutionising the surrounding payment ecosystem, and we are convinced that our industry needs to prepare for their adoption. GenAI is expected to further modernise the online checkout experience, unlocking a new era of personalised conversational commerce. However, productivity gains will play the most pivotal role in shaping the future of the payments industry. Ultimately, the efficiency and seamlessness of payments operations will determine the long-term success of GenAI and its adoption by payment companies.

Why do you need an LLM Engineering Strategy?

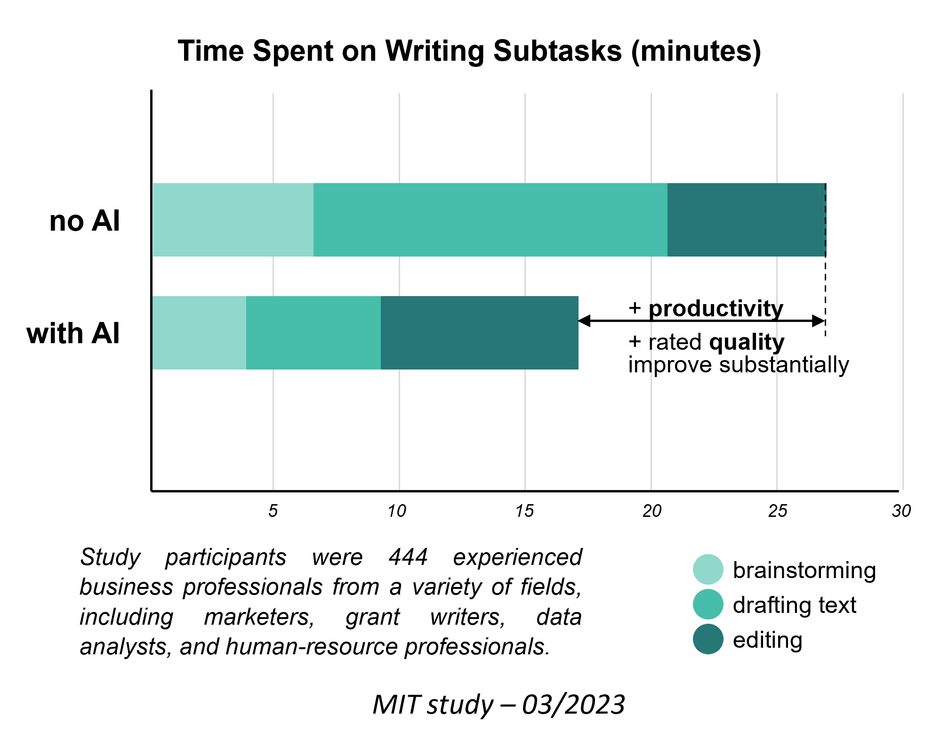

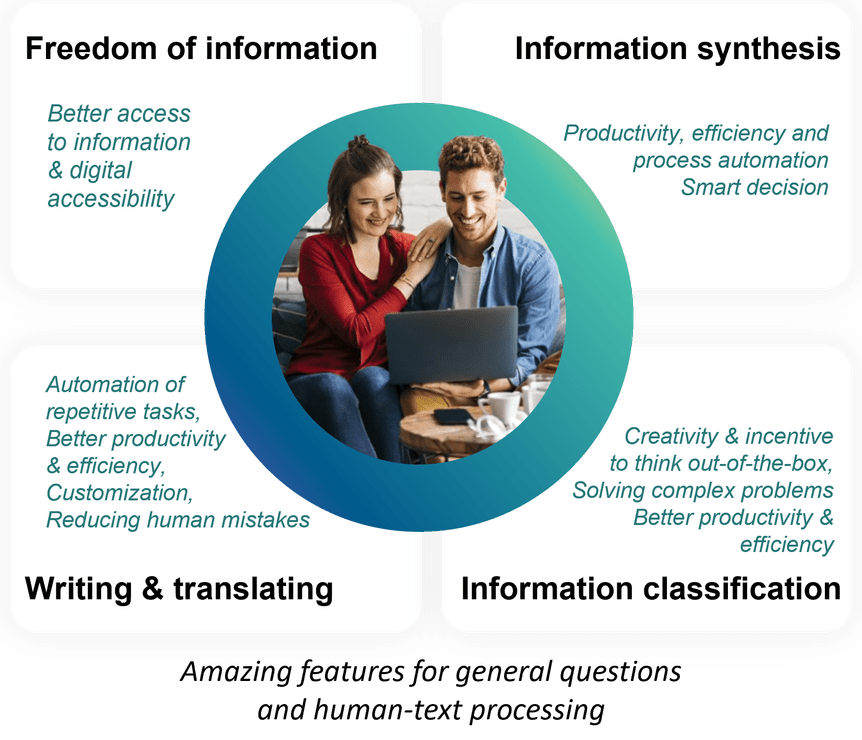

GenAI, and especially LLMs (like ChatGPT), are extraordinary. Some researchers are still studying their remarkable efficiency in "understanding" and "generating" human-like natural language. For any task that involves writing text, they really do bring productivity and quality improvements. This is due to a shift in how business professionals allocate their time: less time spent cranking out an initial draft and more time spent polishing the final result. Today, LLMs help to write and translate text, conversational search is transforming access to information, the classification and synthesis of texts is simplified, and developers are helped with the generation of code…

It is also easy to insert custom instructions (pre-prompts) to tune, adapt, and change the behaviour, style, and tone of an LLM’s output. But if you go back to the internal principle of LLMs, they work much like the autocompletion on your mobile phone when you write a text message.

An LLM decoder is “just” generating the next most likely word! LLMs are designed to always give a statistically plausible answer, not necessarily a true one! LLMs are not deterministic either (this is what is exploited to make them “creative”, when the temperature is not set to 0), and when you integrate a non-deterministic module into a business process, even if it works during your tests and your first steps in production, you cannot prove that it will always give the result you want!
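To make the role of temperature concrete, here is a minimal sketch of next-word sampling. It is a toy illustration, not how any particular LLM is implemented: the word scores are invented for the example, and `sample_next_word` is a hypothetical helper. With a temperature of 0 the most likely word is always chosen; with a higher temperature the same input can produce different words from one call to the next.

```python
import math
import random


def sample_next_word(logits, temperature, rng):
    """Pick the next word from a {word: score} dict (toy illustration)."""
    if temperature == 0:
        # Greedy decoding: always the single most likely word (deterministic).
        return max(logits, key=logits.get)
    # Softmax with temperature: higher values flatten the distribution.
    scaled = {word: score / temperature for word, score in logits.items()}
    top = max(scaled.values())  # subtracted for numerical stability
    weights = {word: math.exp(score - top) for word, score in scaled.items()}
    words = list(weights)
    return rng.choices(words, weights=[weights[w] for w in words], k=1)[0]


# Invented scores for the word that follows "Your payment was".
logits = {"accepted": 2.0, "declined": 1.5, "delayed": 0.5}
rng = random.Random(42)

greedy = {sample_next_word(logits, 0, rng) for _ in range(100)}
creative = {sample_next_word(logits, 1.5, rng) for _ in range(100)}

print(greedy)    # a single word, identical on every call
print(creative)  # usually several different words
```

Even in this toy setting, the only way to guarantee the same word every time is to remove the randomness entirely, which is why a non-deterministic module needs controls around it.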

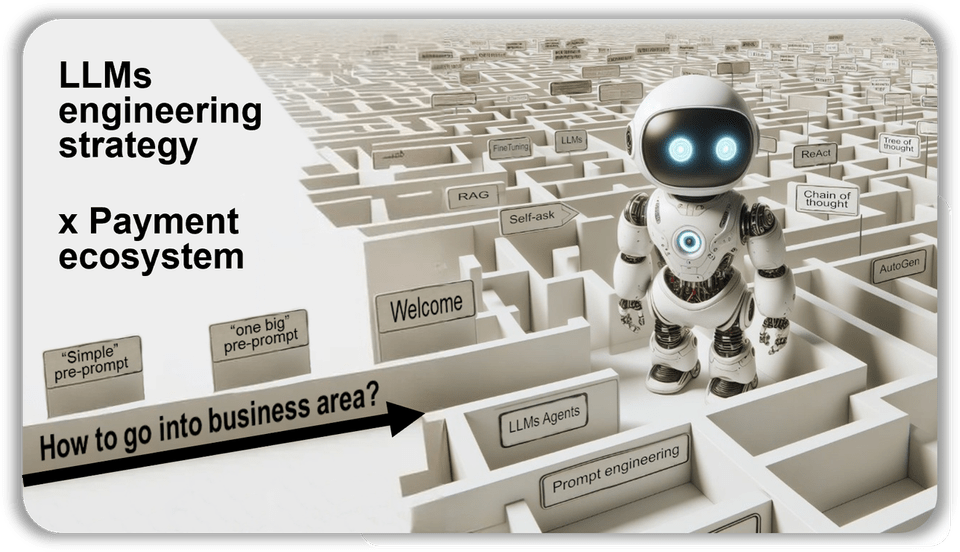

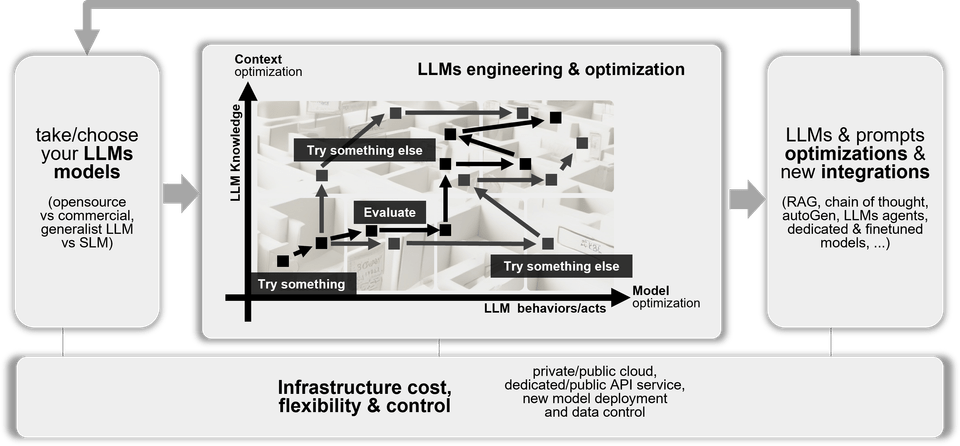

So how can you use LLMs in critical business processes, such as payments? You need to add controls and maximise LLM performance. But the latter is difficult, and it is not always a linear process. It is like navigating a complex maze, where you have to find a path that combines all the new engineering approaches to managing and using LLMs: you need an LLM engineering strategy!

What are the ingredients of an LLM engineering strategy?

Today, maximising the performance of LLMs requires a multi-dimensional ‘test-and-learn’ strategy. First, you need to choose your model: open-source or commercial, and Large Language Models (which are general and trained with gigantic knowledge bases) or Small Language Models (specialised for a business or for specific features). Then you can start the Prompt Engineering process: some techniques optimise the model with the objective of adapting LLM behaviours and actions, while other techniques optimise the context and increase the LLM’s knowledge. Sometimes, the integration of alternative models is necessary to rekindle the engineering process. And, above all, tests, evaluations, and non-regression tests need to be managed. Throughout this optimisation process, you absolutely must manage your infrastructure to control costs, maintain flexibility, and keep control of your business data, model data, and data created by end-user interactions!

Tactics for LLM Engineering:

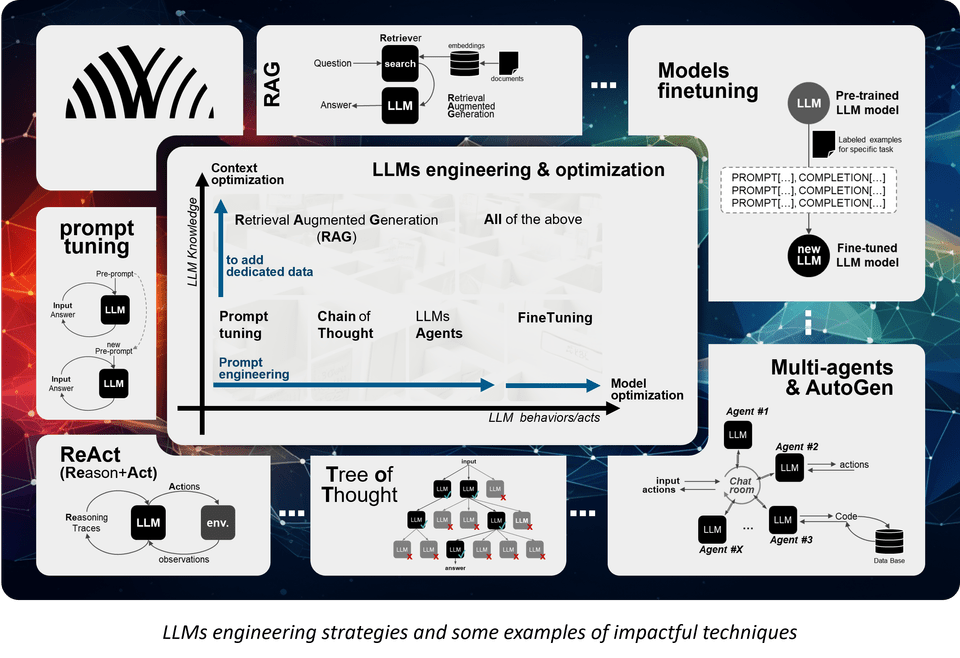

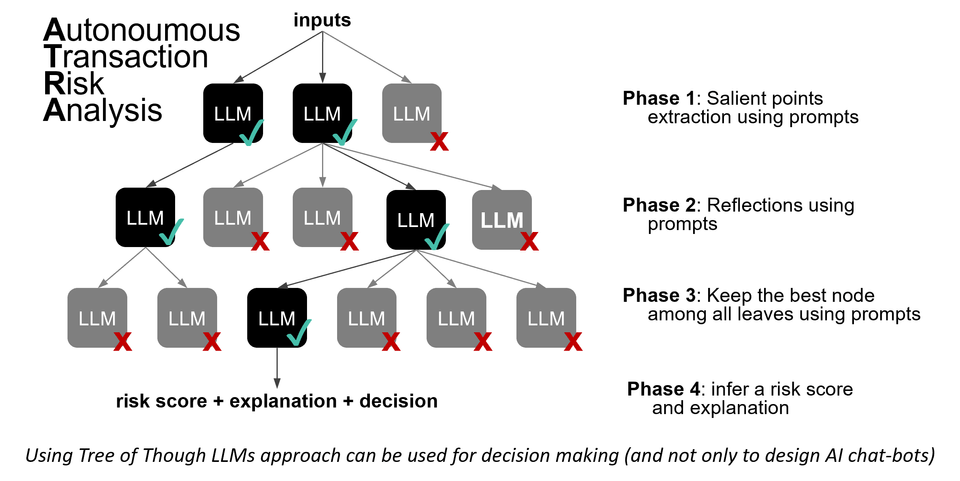

- Prompt Engineering: The simplest example of prompt engineering is adding messages/prompts before your questions such as “you are an expert in high-tech devices, you are a salesman helping a customer, and be proactive”. More advanced techniques manage LLM behaviours through iterative prompts, ReAct, and self-ask strategies. Approaches such as “Chain of Thought” (and related techniques like tree of thought or plan and solve) help to structure responses logically, while “LLM Agents” (such as multi-agent setups or the AutoGen framework) contribute to autonomously generating content or actions in a directed manner.

- Retrieval-Augmented Generation (also called RAG): This strategy enhances an LLM’s knowledge by allowing it to take external knowledge into account instead of relying only on its internal knowledge. The external knowledge is grounded in factual, and sometimes real-time, information. The objective is to enhance the quality and reliability of the generated responses, particularly for complex questions or tasks that require up-to-date or dedicated business knowledge.

- Fine-Tuning Models: A deep learning process that adjusts LLMs to comprehend not just content but also data formats, so that both the content and the behaviours of the LLM can be adapted. This approach requires a lot of data, in the form of many concrete examples, which are used to further train the pre-trained model.

- Holistic Approach: Often, the combination of the above tactics is mandatory to tackle the challenges of optimizing LLM performance, which is not straightforward.
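As a rough illustration of how the first two tactics combine, the sketch below puts a pre-prompt in front of the question and adds retrieved context to it. Everything here is an assumption made for the example: the knowledge base entries are invented, the retrieval is a naive word-overlap ranking (a real RAG system would typically use embeddings and a vector store), and the resulting prompt would still have to be sent to the LLM of your choice.

```python
import re

# Pre-prompt (prompt engineering): sets the role and the limits of the assistant.
SYSTEM_PROMPT = (
    "You are a support assistant for a payment platform. "
    "Answer only from the provided context; if the context is not enough, say so."
)

# Invented knowledge base entries, for illustration only.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days of approval.",
    "Contactless payments above the card limit require a PIN.",
    "Chargebacks can be disputed by the merchant within 30 days.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by the number of words shared with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Assemble pre-prompt, retrieved context, and question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"{SYSTEM_PROMPT}\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"


prompt = build_prompt("How long do refunds take?", KNOWLEDGE_BASE)
print(prompt)
```

The model only sees the passage that matches the question, which is what keeps the answer anchored to business knowledge rather than to whatever the model memorised during training.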

Why is an LLM Engineering Strategy Crucial in the Payment Sector?

As explained at the beginning of this article, and as highlighted by both the Boston Consulting Group and Worldline’s Navigating Digital Payment report, GenAI and LLMs in particular could become game changers in many industries, including the payment ecosystem. On the professional side, operational excellence arises from more efficient tools, automation and well-being. On the consumer side, the impact of GenAI is already significant and will keep growing, with more natural, accessible, automatic and even autonomous shopping interfaces: this clearly concerns all channels including conversational commerce, but also all new LLM-checkout interfaces and (futuristic) Autonomous Payment powered by GenAI. In parallel to these use cases, it is also essential that your LLM engineering strategy takes into account the challenges of AI e-Ethics, considering the AI Act, Responsible and Green AI tech, and using AI4good!

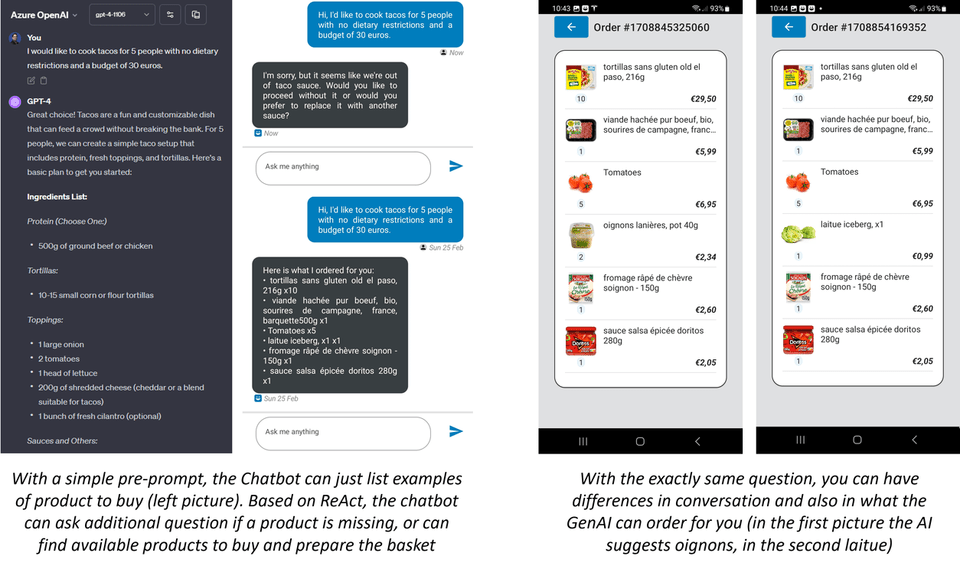

To revolutionise the online checkout experience and conversational commerce, or to optimise payment solutions support (via chatbot, voice-bot, email, or call-bot), you must control the data and the GenAI model deployment when you go into production. Based on your LLM strategy, you can define the perimeter and the context of the answers, manage the behaviours of your LLM, add links to your product or answer databases, and try to avoid hallucinations and drifts. The difficulty is that you need to strike a balance between strongly defined limits and the desire to give your GenAI answers a certain amount of “freedom”. Here is a concrete example of a shopping chatbot:

If you jump into the near future, can you imagine that your AI personal shopper could help you to choose products and buy them for you? Gartner says machine customers represent one of the biggest new growth opportunities of the decade: A machine customer is a non-human economic actor who obtains goods and/or services in exchange for payment. For Gartner, the machine customer era has already dawned: by 2030, executives believe at least 25% of all consumer purchases and business replenishment requests will be substantially delegated to machines.

So, imagine a conversation with your AI personal assistant… You plan to cook something for your friends for tomorrow night. After suggestions and interactions concerning your budget and some allergies, your AI personal assistant decides to shop and to initiate an Autonomous Payment. This is where the Autonomous Transaction Risk Analysis (ATRA) will be needed. This ATRA solution must analyse the conversation, the basket decision, and the context of the consumer. A classical approach based on complex rules cannot deal with such a challenging task. We therefore leverage the power of LLMs to assess such a decision. Below, we provide an example of using LLMs for decision making:
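As an illustration only, and not a description of Worldline’s actual ATRA solution, the following minimal sketch shows how an LLM-based risk decision could be wrapped in controls. The `call_llm` argument is a hypothetical function standing in for whichever model API you use, and the conversation, basket, and prices are invented. The hard budget rule stays in plain code, and any model output that is not in the expected format falls back to a manual review instead of being trusted.

```python
import json

ALLOWED_DECISIONS = {"approve", "review", "decline"}


def build_risk_prompt(conversation, basket, context):
    """Describe the purchase to the model and request a strict JSON answer."""
    return (
        "You assess whether an autonomous purchase matches what the consumer asked for.\n"
        f"Conversation: {json.dumps(conversation)}\n"
        f"Basket: {json.dumps(basket)}\n"
        f"Consumer context: {json.dumps(context)}\n"
        'Reply with JSON only: {"decision": "approve|review|decline", "reason": "..."}'
    )


def assess_transaction(conversation, basket, context, call_llm):
    """Return a decision dict; call_llm is a hypothetical prompt -> text function."""
    # Hard rule checked in code, never delegated to the model.
    total = sum(item["price"] * item["quantity"] for item in basket)
    if total > context["budget"]:
        return {"decision": "decline", "reason": "basket exceeds stated budget"}

    raw = call_llm(build_risk_prompt(conversation, basket, context))
    try:
        answer = json.loads(raw)
    except (json.JSONDecodeError, TypeError):
        return {"decision": "review", "reason": "unparseable model output"}

    decision = answer.get("decision") if isinstance(answer, dict) else None
    if not isinstance(decision, str) or decision not in ALLOWED_DECISIONS:
        return {"decision": "review", "reason": "unexpected model output"}
    return {"decision": decision, "reason": str(answer.get("reason", ""))}


# Invented example data and stubbed model responses.
conversation = ["Dinner for four tomorrow, budget 60 euros, one guest is allergic to nuts."]
basket = [
    {"name": "pasta", "price": 3.0, "quantity": 2},
    {"name": "pesto", "price": 4.5, "quantity": 1},
]
context = {"budget": 60.0, "allergies": ["nuts"]}


def well_behaved_llm(prompt):
    return '{"decision": "review", "reason": "pesto may contain nuts"}'


def chatty_llm(prompt):
    return "Sure! I think this basket looks fine."


print(assess_transaction(conversation, basket, context, well_behaved_llm))
print(assess_transaction(conversation, basket, context, chatty_llm))
```

The design choice is that the LLM only contributes a judgement inside a fixed set of outcomes: anything outside that set is routed to review rather than approved by default.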

To finish, here are the main short/medium term impacts of GenAI on the payment ecosystem:

Today, dedicated LLMs are already used to help developers be more efficient, to guide them in adding useful comments, to simplify writing documentation, and to optimise their code. In addition, LLMs can be used for code generation: they can help to “re-develop” parts of payment solutions to be more efficient (in terms of cost, energy consumption, and ecological impact) using, for example, more recent development languages already known to be less energy-intensive (like the Rust programming language).

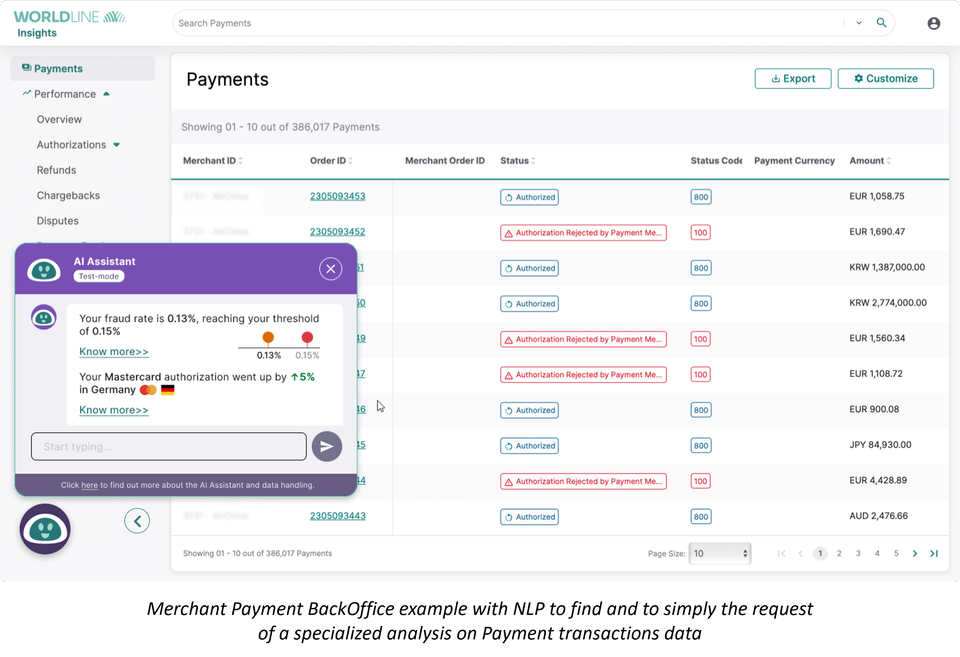

And finally, for merchant back office systems, LLMs can provide tailor-made and personalised portals, with more efficient and intuitive ways to interact with merchant tools: finding any information effortlessly through natural language requests, using conversational interfaces to create data visualisations and statistics never seen or imagined before, and using NLP (Natural Language Processing) to analyse payments data in new ways and to improve payment data monitoring.

One more thing

Beyond the ongoing extraordinary buzz around ChatGPT, GenAI and Large Language Models, at Worldline we continue to explore LLM engineering strategies, studying ways to mitigate hallucination, to improve LLM knowledge, and to manage LLM behaviours and actions. LLMs are clearly useful to design and deploy a new generation of bots, to integrate conversational commerce (including immersive payment in eCommerce), and to optimise and automate the support of payment platforms, but we believe that they can do more than that, which is why we have started to investigate how we can use them for code generation and for decision making!

Yet, although an LLM engineering strategy may be essential, many exciting challenges remain to be solved before LLMs can be put into production for business today!

The authors wish to thank the following colleagues for their valuable contributions to this article: Marie-Alice Blete, Yacine Kessaci, Olivier Méry, Colombe Herault, Luxin Zhang, Jekaterina Aleksejeva, Liyun He-Guelton, Johan Maes, and David Daly.

Cyril Cauchois