The impact of Gen AI on Financial Institutions: Creating business value from the start.

06/05/2024

A nuanced view on the latest generation of AI models: how to tackle their risks and challenges and use them responsibly to generate value for your services and customers from day 1.

Where do we come from and where are we heading?

At Worldline, at the core of the payments industry, we have been integrating Machine Learning into our products and services for over a decade. Since 2014, we have followed the many technological evolutions in this domain, creating our own purpose-built AI models for many applications.

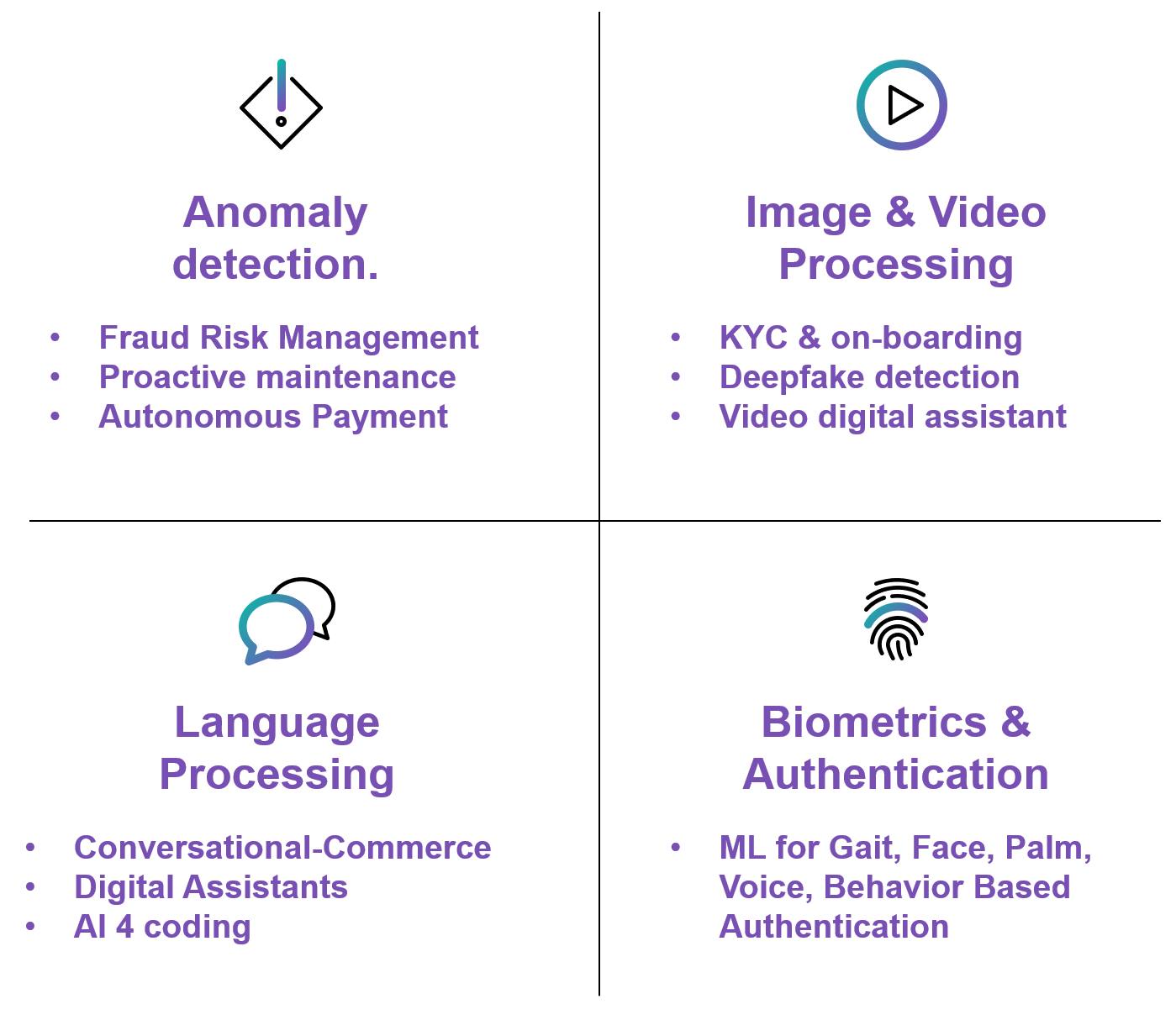

Figure 1 – AI is already being used in many domains.

Fraud Risk Management is powered by multiple AI technologies, many authentication solutions use biometrics, and natural language processing has already brought us digital assistants and conversational self-service solutions.

On top of that, since the end of 2022, Large Language Models such as the one behind ChatGPT have amazed the world with their capabilities: new use cases emerge every day, raising expectations for what these models will be able to do next. However, generative AI is not fundamentally based on revolutionary new technology. Rather, its capabilities have been unleashed by the sheer size of the training data and the complexity of the models.

As such, it opens a vast array of new opportunities for people to be more effective in their jobs and to create more efficient and accurate services. Many people already use LLM-based assistants as a first step in information gathering or data consolidation and, as part of the new generation of IT toolsets, a good co-pilot is becoming indispensable for any language-driven task, be it document summarisation, text translation or code development.

The downside of today’s generative AI models is the lack of control. Lack of control over the training data used by the companies that created the most performant LLMs. Lack of control over the generated output: the answers produced remain the result of a statistical approach that generates the most likely sentence in a given context, and outputs are still highly sensitive to small variations in how the input is phrased.

Such powerful technology calls for responsible usage

Especially in our world of banking and payments, trust is essential. Fortunately, Europe is a front-runner in this area, with the recent AI Act.

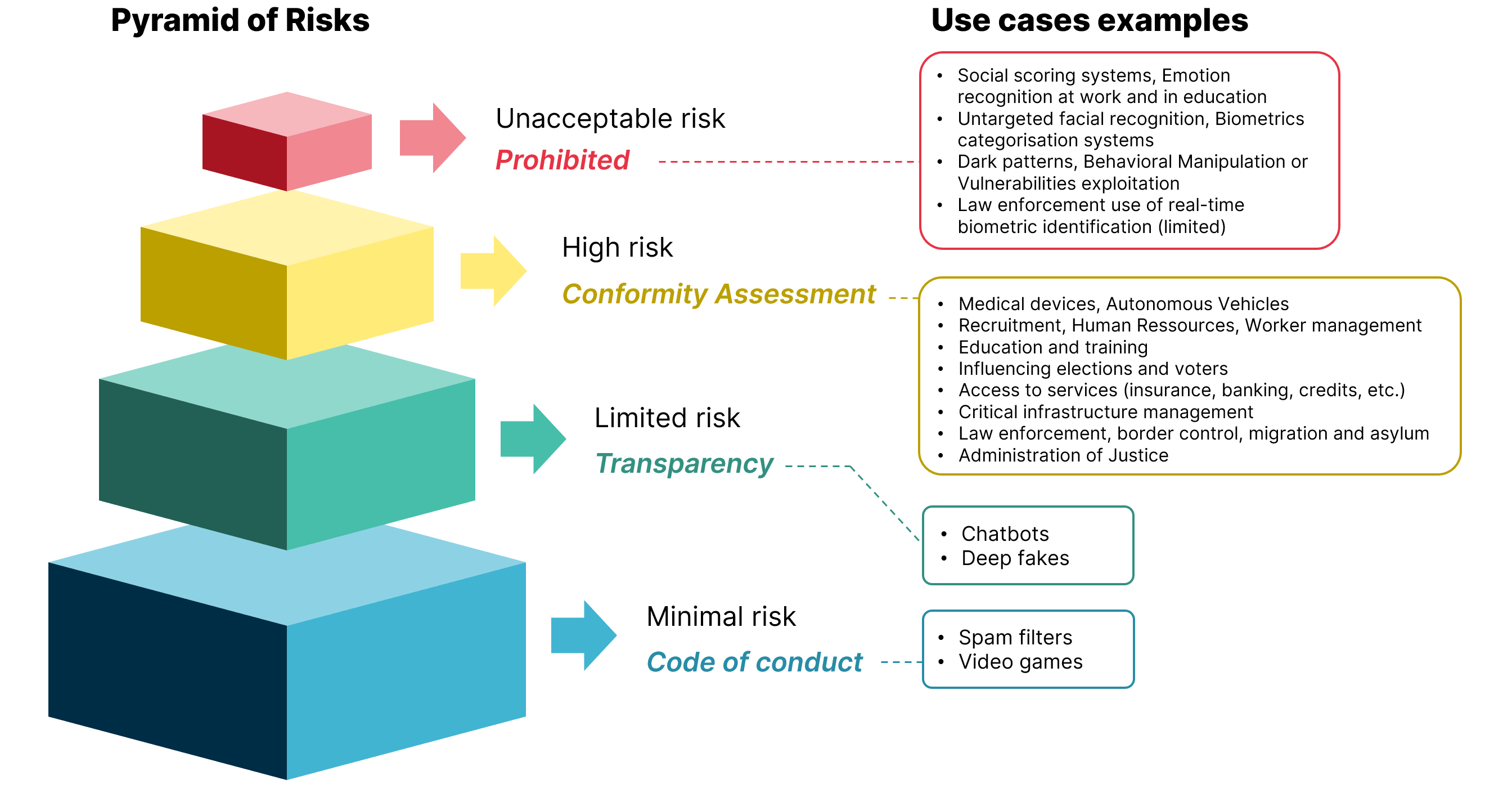

Evidently, our intended usage of AI in the financial industry does not fall into the prohibited risk category. We do use biometrics, for example, but only to increase the level of security and to make payments more frictionless where relevant for the end user.

Figure 2 - AI Risk categories according to the European AI Act.

This regulation will set the baseline for what we all expect from responsible AI: that it will not be used to manipulate us or to impose unfair disadvantages on some groups in society.

It may seem obvious that we do not want biased AI models, and yet this is not a given for Large Language Models trained on whatever Internet data their creators chose to use: these models inherit the biases of the data they are trained on. But beyond avoiding bias, making the outcomes of AI models transparent and explainable is equally important.

Why explainability matters

For transparency:

As an end user, if your loan application is rejected because an AI-powered credit risk scoring engine gave you a negative score, you have the right to understand which criteria or inputs were used to make that decision.

For efficiency:

If an AI model provides a risk score as an input to fraud investigators, they can work much more efficiently when they understand why the model produced a high risk score, which factors weighed most heavily, and which anomalies were detected.
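To make this concrete, here is a minimal sketch of how per-factor explanations can be read off a simple logistic risk-scoring model. The feature names, weights and bias are hypothetical values chosen for illustration, not an actual Worldline model. For a linear model like this one, each feature's contribution to the log-odds is exactly its weight times its value; for more complex models, attribution techniques such as SHAP estimate comparable per-feature contributions.

```python
import math

# Hypothetical fraud-model parameters (log-odds per unit of each feature).
# Names and numbers are illustrative assumptions, not a real model.
WEIGHTS = {
    "amount_vs_usual": 1.2,   # transaction amount divided by the customer's usual amount
    "new_device": 0.9,        # 1.0 if the device has never been seen for this customer
    "foreign_merchant": 0.6,  # 1.0 if the merchant is outside the customer's home country
    "night_time": 0.3,        # 1.0 if the transaction happens between 00:00 and 06:00
}
BIAS = -4.0


def explain_score(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the fraud probability and each feature's log-odds contribution, largest first."""
    contributions = [(name, weight * features[name]) for name, weight in WEIGHTS.items()]
    logit = BIAS + sum(value for _, value in contributions)
    probability = 1.0 / (1.0 + math.exp(-logit))  # logistic (sigmoid) function
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return probability, contributions


if __name__ == "__main__":
    transaction = {"amount_vs_usual": 3.0, "new_device": 1.0, "foreign_merchant": 0.0, "night_time": 1.0}
    risk, reasons = explain_score(transaction)
    print(f"risk score: {risk:.2f}")
    for name, contribution in reasons:
        print(f"  {name:<17} {contribution:+.2f}")
```

Listing the contributions alongside the score tells an investigator that, in this example, the unusually large amount drives the alert far more than the new device or the time of day.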

However, using AI responsibly goes beyond avoiding bias and ensuring transparency. As with every technological innovation, the first question to ask ourselves is whether it provides enough added value to justify the extra complexity and energy consumption it introduces. AI models can indeed be very resource-intensive in both the training and inference phases. Fortunately, a lot of work is being done on tuning the software and hardware used to create and run Machine Learning solutions, making models smaller, more efficient and more focused on the specific tasks they are designed for.

If you are interested in a solid approach to optimising your AI models, this post about Green AI on the Worldline engineering blog covers the subject in more depth.

So, how can we create business value from the start?

With a technology so powerful, yet so difficult to master – in essence, Large Language Models remain statistical predictions of the next best-matching word – it is essential to take great care when integrating it into your business services and processes.
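As a toy illustration of that statistical mechanism, the sketch below turns scores for three candidate next words into probabilities with a softmax, then picks one either greedily or by sampling with a temperature. The candidate words and their scores are invented for illustration; a real LLM computes such scores with a neural network over a vocabulary of tens of thousands of tokens.

```python
import math
import random
from typing import Optional


def softmax(scores: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = {word: s / temperature for word, s in scores.items()}
    top = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {word: math.exp(s - top) for word, s in scaled.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def next_word(scores: dict[str, float], temperature: float = 1.0,
              greedy: bool = False, rng: Optional[random.Random] = None) -> str:
    """Pick the next word greedily (most likely) or by sampling from the distribution."""
    probs = softmax(scores, temperature)
    if greedy:
        return max(probs, key=probs.get)
    if rng is None:
        rng = random.Random()
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


if __name__ == "__main__":
    # Hypothetical scores for the word following "Your card payment was ..."
    scores = {"declined": 2.1, "approved": 2.0, "refunded": 0.5}
    print(softmax(scores))
    print(next_word(scores, greedy=True))
    print(next_word(scores, temperature=0.7, rng=random.Random(42)))
```

Because "declined" and "approved" score almost equally here, a small shift in the scores, such as one caused by a slightly different phrasing of the input, would be enough to flip the greedy answer.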

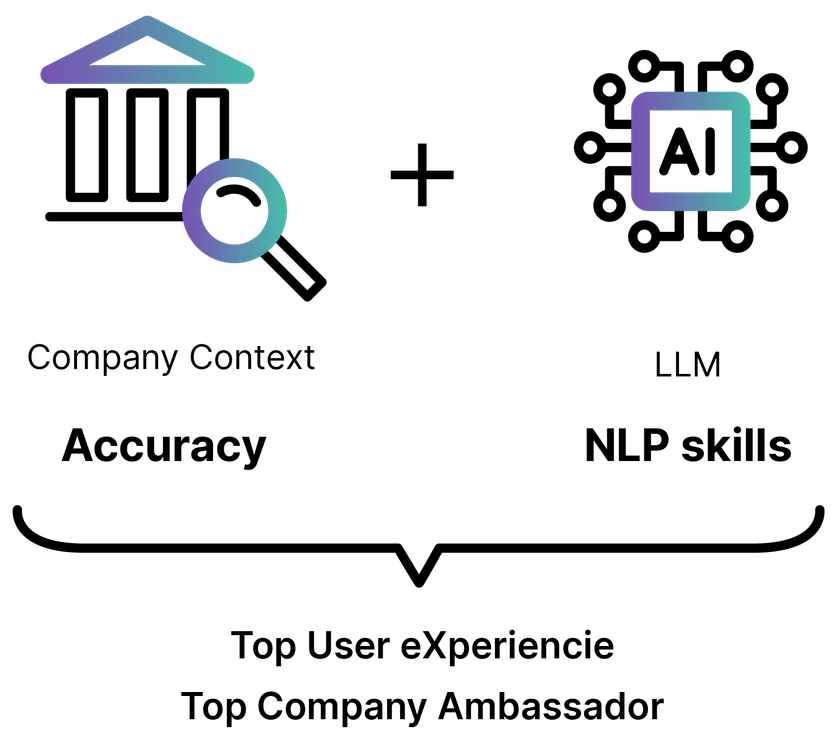

To meet customer expectations, now that we have all grown used to high-quality conversations with chatbots like ChatGPT or Gemini, you have little choice but to use the same powerful NLP technology stacks in your own digital channels. But how do you also make this conversational tool your top company ambassador? You will need a combination of prompt engineering, Retrieval Augmented Generation (RAG) and possibly fine-tuning to ensure the answers stay bound to your company context, respect the defined roles and limitations, and use the appropriate corporate communication style. For more on this, check out this post about defining an LLM engineering strategy.

Figure 3 - Your LLM as top company ambassador.
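The grounding idea can be sketched in a few lines: retrieve the company documents most relevant to a question, then wrap them in a prompt that fixes the assistant's role, limitations and tone. This is a minimal sketch under stated assumptions: the knowledge base and the company name ExampleBank are hypothetical, simple word overlap stands in for the embedding-based similarity search a real RAG system would use, and the resulting prompt would be sent to whichever LLM you choose (no specific API is assumed).

```python
import re

# Hypothetical knowledge base: in a real system these would be chunks of your own
# documentation, indexed in a vector store.
KNOWLEDGE_BASE = [
    "Refunds are credited to the original card within a few business days.",
    "Contactless payment limits can be changed in the mobile app.",
    "To block a lost or stolen card, open the mobile app and go to Card settings.",
]

# Role, limitations and communication style for a hypothetical company, ExampleBank.
SYSTEM_RULES = (
    "You are the virtual assistant of ExampleBank. "
    "Answer only from the context below. If the answer is not in the context, "
    "say so and offer to connect the customer with a human advisor. "
    "Use a friendly, concise and professional tone."
)


def tokens(text: str) -> set[str]:
    """Lower-cased alphanumeric words of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the question (stand-in for vector search)."""
    q = tokens(question)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokens(d)]


def build_prompt(question: str, docs: list[str], k: int = 2) -> str:
    """Combine the rules, the retrieved context and the question into one prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return (
        f"{SYSTEM_RULES}\n\n"
        f"Context:\n{context or '- (no relevant documents found)'}\n\n"
        f"Customer question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    print(build_prompt("How do I block a lost card?", KNOWLEDGE_BASE, k=1))
```

Keeping the rules and the retrieved context outside the model itself makes them easy to review and update, which is typically why prompt engineering and RAG are tried before fine-tuning.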

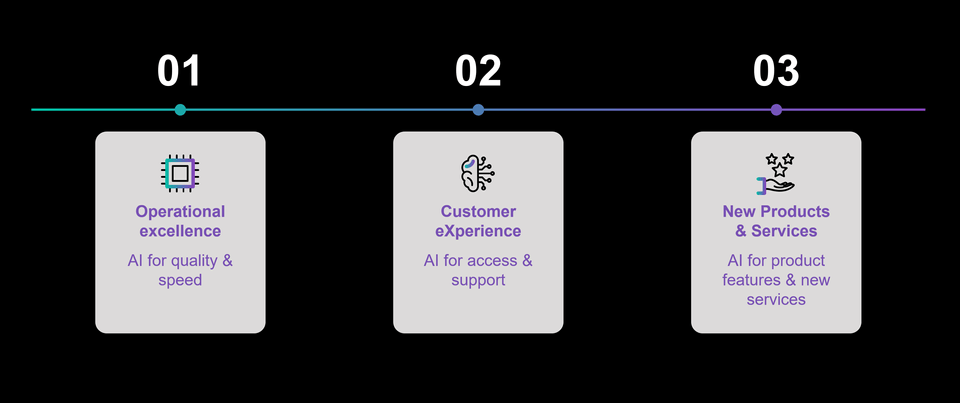

Despite these challenges, we believe now is the time to start using the latest AI technology to improve operational excellence and elevate the customer experience of your products and services:

“Use the latest AI technology to improve operational excellence and elevate customer experience”

Figure 4 - Start now to integrate generative AI.

- Start by integrating AI tools into your internal processes. Make AI a tool for your customer service advisors, so they can be more efficient and accurate in providing high-quality answers to your customers. This way, AI-generated content serves as an input that makes your human experts more effective.

- Use it as an access channel to your products and services. This second step follows naturally, as it is the essence of LLMs: use them to improve the accessibility of your products, making it easier to find the right information or to trigger the best action. AI can genuinely make your products more accessible and inclusive, bringing a better customer experience.

- Rethink your products and services to harness the power of the latest AI technology. By doing so, you can create better services, generate more value for your customers, and personalise and augment your product experience.

As you move from the first step to the third, the complexity and risks associated with the use of Gen AI increase tremendously, but our solutions are ready to accompany you. Don’t hesitate to contact our product experts, who are ready to support you on this exciting and rewarding journey.

Learn how our AI-powered solutions can help you boost operational excellence and elevate customer experience: